To Err Is AI, To Verify Is Human: Why AI Outputs Still Need Human Judgment

As someone who loves stories and children’s books, I read them whenever I get the chance. What I enjoy most are the colourful, vibrant illustrations that bring stories to life.

Recently, while waiting at a café in Chennai, I picked up a magazine and started flipping through it. That’s when something felt off. The illustrations looked polished at first glance, but a closer look revealed strange inconsistencies: hands with seven fingers, heads turned at impossible angles, characters floating in mid-air.

This wasn’t a fantasy magazine. These were clear errors. And yet, they had made it to print.

What surprised me wasn’t that AI-generated images had been used; that is increasingly common. What surprised me was that the errors went unnoticed and unchecked, and were ultimately published.

At a time when AI is becoming ever more powerful and accessible, it’s worth reminding ourselves of a simple truth: AI can still be wrong.

What are AI hallucinations?

AI systems work by learning patterns from data. Their outputs are shaped by the data they are trained on and the context they are given. This is why the phrase “garbage in, garbage out” continues to hold true.

While AI developers have made significant progress in reducing errors, today’s models still make mistakes—sometimes even in simple scenarios. These mistakes can occur due to incorrect data, incomplete information, or missing context.

Systems amplify errors; they don’t suppress them

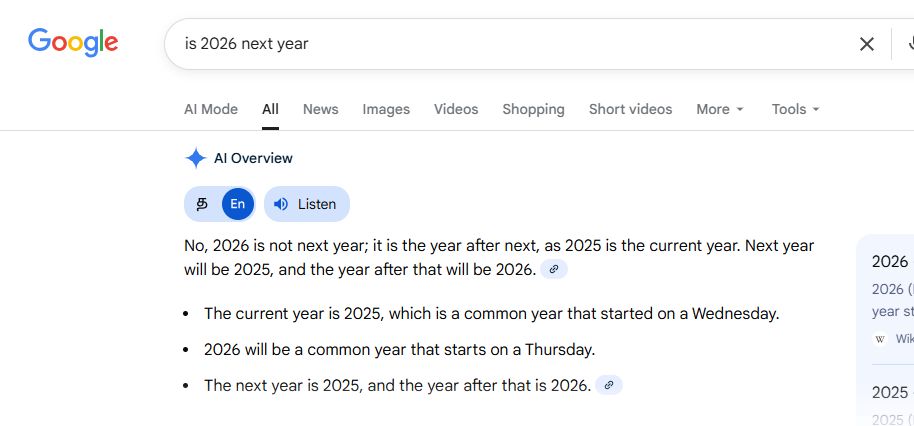

A well-known example surfaced recently when an AI system struggled to answer a seemingly straightforward question about the upcoming year. The confusion wasn’t due to malice or intent—it was a result of missing or misinterpreted context.

These confident but incorrect outputs are what we commonly refer to as AI hallucinations. This is also why almost every AI platform includes a disclaimer reminding users that the system can make mistakes and that outputs should be double-checked.

To err is AI

The magazine incident wasn’t an isolated case. Most of us have encountered AI-generated content that slipped through without review—an incorrect fact, a misplaced reference, an unedited prompt, or a subtle but serious error.

This raises an important question: when these mistakes occur, where does responsibility lie?

AI tools today offer an unprecedented ability to create—text, images, code, and ideas—within seconds. That power, however, comes with responsibility. Once AI generates an output, the task of verification shifts firmly to the human using it.

AI can assist, accelerate, and inspire. But it does not absolve us of judgment.

Human-in-the-loop: a lesson schools cannot ignore

This idea becomes especially important when we look at how AI is entering classrooms.

As AI literacy is introduced in schools and students increasingly use AI tools for learning, one of the most critical lessons they must learn is that AI is not infallible. Teaching students that AI can make mistakes encourages them to pause, question, and reflect—rather than accept outputs at face value.


Today’s students are growing up surrounded by AI systems. Helping them understand that AI outputs are shaped by data—and that data can be incomplete, biased, or outdated—reinforces the idea that AI is a human-made technology, not an all-knowing authority.

This awareness strengthens human agency. It builds critical thinking. And it becomes a cornerstone of meaningful AI literacy in schools.


Making errors visible, not invisible

At inAI, this principle is intentionally built into both the curriculum and the platform. Students are encouraged to see what happens behind the scenes—how models are trained, how outputs are formed, and where things can go wrong.

By making errors visible rather than hidden, students learn not just how to use AI, but how to think with it. They learn that verification is not an optional step—it is a responsibility.

As AI becomes more present in everyday learning, remembering this simple idea may matter more than ever:

To err is AI; to verify is human.